Deep Reinforcement Learning: A New Frontier for Robotic Arms

In recent years, deep reinforcement learning (deep RL) has emerged as a game-changer in robotic control. Instead of programming a robot arm’s motions line by line, we define a goal through a reward function and let the robot work out how to achieve it by trial and error. It is akin to teaching a child a new skill: give feedback on good and bad outcomes and let them improve over many attempts. Over a large number of simulated trials, a deep neural network controller can gradually discover an effective strategy, whether that means balancing a two-legged robot, picking up a delicate object, or precisely inserting a peg into a hole, all without a human writing those instructions directly in code.
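To make the reward-and-retry idea concrete, here is a deliberately tiny sketch. It is not deep RL: a single controller gain stands in for the neural network, and plain random hill climbing stands in for the learning algorithm. The toy joint dynamics and every name in it (`reward`, `run_episode`, `train`) are assumptions made for illustration only.

```python
import random


def reward(angle, target):
    """Higher is better: penalize distance from the target joint angle."""
    return -abs(target - angle)


def run_episode(gain, target=1.0, steps=20):
    """Roll out a toy 1-D joint under a proportional policy; return total reward."""
    angle = 0.0
    total = 0.0
    for _ in range(steps):
        action = gain * (target - angle)  # policy: map observation to action
        angle += action                   # toy dynamics: action is the angle change
        total += reward(angle, target)
    return total


def train(trials=200, seed=0):
    """Trial and error: perturb the gain, keep the change only if reward improves."""
    rng = random.Random(seed)
    best_gain = 0.0
    best_return = run_episode(best_gain)
    for _ in range(trials):
        candidate = best_gain + rng.gauss(0.0, 0.1)
        candidate_return = run_episode(candidate)
        if candidate_return > best_return:
            best_gain, best_return = candidate, candidate_return
    return best_gain, best_return


if __name__ == "__main__":
    gain, total = train()
    print(f"learned gain={gain:.3f}, total reward={total:.3f}")
```

Nothing in `train` says how to reach the target; it only sees the reward that comes back from each attempt. A real deep RL setup replaces the single gain with a network and the hill climbing with a gradient-based algorithm, but the loop has the same shape.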

One recurring challenge is the notorious sim-to-real gap: the gulf between the idealized, convenient world of a simulator and the messy complexities of reality. A policy that performs flawlessly in simulation can still fail in the real world... To close this gap, engineers make the simulator more realistic by tuning its physics parameters, and they use techniques such as domain randomization and actuator dynamics modelling to train policies that can handle real-world unpredictability.
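As a rough illustration of domain randomization, the sketch below draws fresh physics parameters for every episode so that a controller is never evaluated against just one "perfect" simulator. A simple PD controller stands in for a learned policy, and the parameter names, ranges, and 1-D joint model are all assumptions chosen for the example, not values from any particular robot or simulator.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    mass: float        # effective mass of the joint (illustrative units)
    friction: float    # viscous friction coefficient
    motor_gain: float  # ratio of delivered to commanded force (crude actuator model)


def sample_params(rng):
    """Draw one randomized 'world'; the ranges are hypothetical."""
    return PhysicsParams(
        mass=rng.uniform(0.8, 1.2),
        friction=rng.uniform(0.05, 0.3),
        motor_gain=rng.uniform(0.85, 1.15),
    )


def run_episode(kp, kd, params, target=1.0, dt=0.01, steps=500):
    """Simulate a 1-D joint under a PD controller; return the final position error."""
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        command = kp * (target - pos) - kd * vel
        force = params.motor_gain * command - params.friction * vel
        vel += (force / params.mass) * dt  # semi-implicit Euler integration
        pos += vel * dt
    return abs(target - pos)


def randomized_rollouts(kp, kd, episodes=50, seed=0):
    """Run one episode per sampled world; return (mean error, worst error)."""
    rng = random.Random(seed)
    errors = [run_episode(kp, kd, sample_params(rng)) for _ in range(episodes)]
    return sum(errors) / len(errors), max(errors)


if __name__ == "__main__":
    mean_err, worst_err = randomized_rollouts(kp=20.0, kd=5.0)
    print(f"mean error={mean_err:.6f}, worst error={worst_err:.6f}")
```

In training, the same sampling step would run at the start of each episode, so the policy is rewarded only for behaviour that works across the whole range of masses, frictions, and actuator responses rather than for exploiting one exact set of simulator settings.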

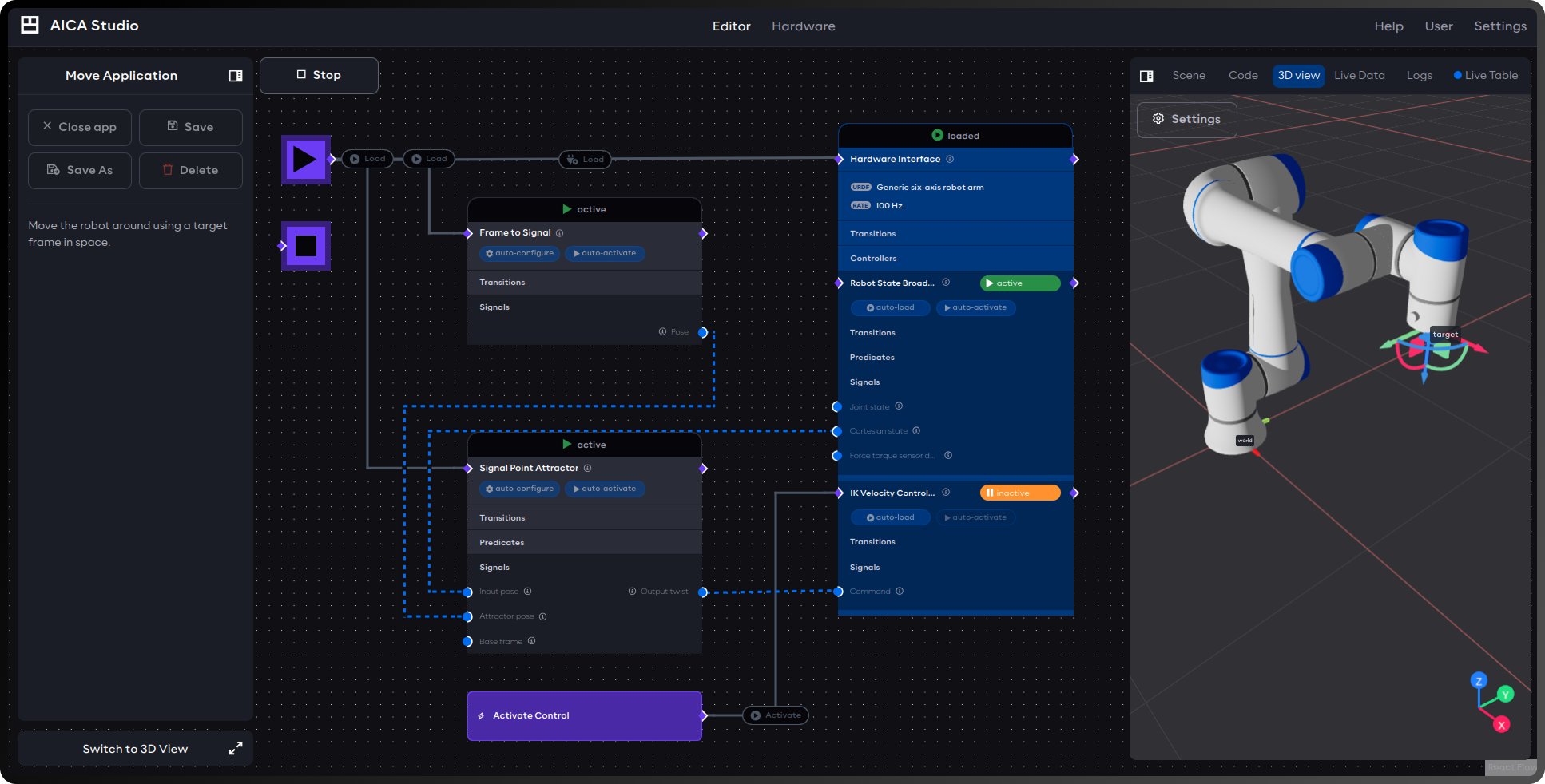

AICA is a prime example of this much-needed new generation of robotic platforms. Our framework integrates deep reinforcement learning (RL), classical control, and learning from demonstration within a plug-and-play architecture that helps engineers build smarter, more adaptive systems. It streamlines RL policy deployment by combining event-driven execution with tools that help bridge the sim-to-real gap.